My initial thoughts on GitHub Copilot X

TL;DR: I am unimpressed by the GitHub Copilot “X” chatbot, or whatever it's cosplaying as.

This tool is about as impressive as a leaky tap – it drips out the occasional coherent suggestion, but mostly it's just annoyingly persistent white noise. If you're the type that enjoys sugar-coated nonsense, then by all means, hit that back button now. For those brave enough to continue, let's plunge right into this cavalcade of disappointment.

So, dear reader, I actually don't have anything nice to say about this. But if I had to think of it from a technical point of view, without seeing the facts and figures, I'd say it's not financially feasible, or perhaps not even viable hardware-wise, to get it to where I think it should be. I don't believe it's been done in bad faith; it comes down to technical constraints.

GitHub Copilot X, you say? More like GitHub Copilot Ex, as in an ex-partner who promised the stars and delivered a load of hot air. The kind of relationship you regret every time you look back on it. It's a maddening dance of grandiose claims and lackluster delivery that makes you wonder if you've stumbled into some twisted comedy show. Buckle up, this is going to get rough.

So, Thursday: the day my beta invite arrived. You'd think I'd be elated, right? Not quite. After waiting for what felt like two months and seeing GPT4's capabilities, I already had my preconceptions, and changing them would be a monumental task. No fireworks exploded, no choir of angels descended from the heavens. Instead, opening the email and reading that I had to download VS Code Insiders and then install the beta extension had me staring daggers at my monitor: something I had lost interest in because it took so long now required me to jump through more hoops. Well, dear reader, it certainly did not set itself up well.

Let's do a quick breakdown.

Pros

- The ability to highlight a piece of code and ask it a question is impressive.

- It's fast (consider this a con as well)

- Pressing cmd + i opens a sort of command interface where you can instruct it to take an action (again, this doubles as a con)

- It’s cheap

- Base copilot itself has proven to be an indispensable tool.

- We can assume everything here is just beta (yep, here's your get-out-of-jail-free card)

- If it's free and just an addition to the original package, it can be safely ignored and never used.

Cons

- There's actually nothing ‘X’ about this. It did not level up; it devolved, because Microsoft jammed unnecessary filters into it, and it's an overfitted mess that struggles to deviate from what it knows while being in huge conflict with its own rules.

- It is still Copilot, just with a chat interface, so the suggestions it can give are about the same.

- Answers are way too long and convoluted, and the explanations are unwelcome most of the time.

- A chat window in the sidebar doesn't work if it keeps answering like it does; it will need a bigger window. But it's convenient.

- GPT4 will generally give better and more correct suggestions

- The command interface rivals only GitHub Copilot X itself in terms of uselessness; it is nearly unusable.

- The code stench is so putrid that I can smell it without even seeing the code. This is a shambles and does not bode well for the future of this ‘X’. eXcruciatingly disappointing.

- Overhyped marketing that left me majorly dissatisfied.

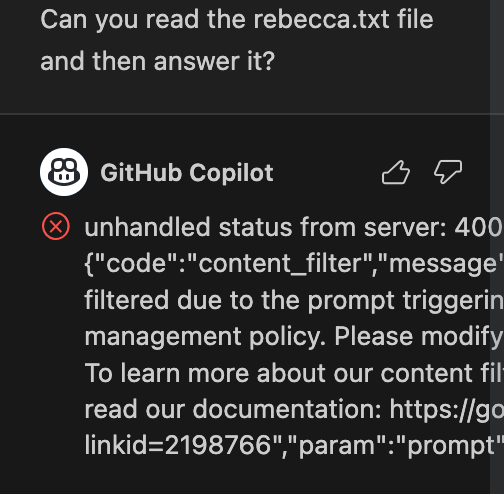

Oh, this is pure gold. Not only is Copilot X a convoluted mess, but it's also tripping Azure OpenAI’s content management policies with source code. This isn't my doing; it's the glorious tool's mishap, and yet, I'm the one facing the brunt of it. They've got some nerve, I'll tell you that.

```text
unhandled status from server: 400 {"error":{"code":"content_filter","message":"The response was filtered due to the prompt triggering Azure OpenAI’s content management policy. Please modify your prompt and retry. To learn more about our content filtering policies please read our documentation: https://go.microsoft.com/fwlink/?linkid=2198766","param":"prompt","status":400,"type":null}}
```

Errors, overfitting, useless Microsoft junk! According to this, it was not even my input that got filtered but a response it wanted to write. Talk about making your problems mine.

Is it hyped? Absolutely. And does it live up to the hype? Absolutely not. It's like getting all pumped for the big race, only to realize that your vehicle is a tricycle.

If you thought that the repetitive drivel of "As an AI language model" from our dear OpenAI was a pain, brace yourself for the onslaught of "As an AI programming assistant…" that this tool spews. It's like a parrot that knows only one phrase and won't shut up about it. And what's with the lengthy excuses and apologies? This isn't a therapy session, it's coding. Short, simple, and to the point - how hard is that?

I've got an inkling that this tool, or Copilot itself, is actually a heavily modified version of GPT3 and might have a 2048-token input limit that holds the context of open and recently opened or viewed files. However, I did ask it, and this is what it said (I am not going to waste your time or mine dissecting its answer; it's obvious):

As an AI programming assistant, I do not have a token limit. However, I only have access to the files that you have opened in your Visual Studio Code editor. I cannot access any files outside of your project directory or any files that you have not opened in your editor.
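To make the token-limit hypothesis concrete, here is a minimal TypeScript sketch of what a fixed input budget filled with recently viewed files could look like. It is an illustration under stated assumptions, not how Copilot is known to work: the 2048-token budget is the guess from the paragraph above, the "about 4 characters per token" estimate is a rough rule of thumb rather than a real tokenizer, and the `OpenFile` type, `estimateTokens`, `packContext` and the sample file names are all hypothetical.

```typescript
// Illustration of the hypothesis above only; NOT Copilot's actual behaviour.
interface OpenFile {
  path: string;
  text: string;
  lastViewed: number; // hypothetical: larger = viewed more recently
}

// Rough rule of thumb: ~4 characters per token. Real BPE tokenizers differ.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Greedily keep the most recently viewed files that still fit in the budget.
// A file that would overflow the budget is skipped entirely, not truncated.
function packContext(files: OpenFile[], budget = 2048): string[] {
  const byRecency = [...files].sort((a, b) => b.lastViewed - a.lastViewed);
  const kept: string[] = [];
  let used = 0;
  for (const file of byRecency) {
    const cost = estimateTokens(file.text);
    if (used + cost > budget) continue;
    kept.push(file.path);
    used += cost;
  }
  return kept;
}

// Hypothetical open files, sized so the large definitions file does not fit.
const files: OpenFile[] = [
  { path: "index.ts", text: "x".repeat(4000), lastViewed: 3 }, // ~1000 tokens
  { path: "text_splitter.d.ts", text: "x".repeat(6000), lastViewed: 2 }, // ~1500 tokens
  { path: "utils.ts", text: "x".repeat(2000), lastViewed: 1 }, // ~500 tokens
];

console.log(packContext(files)); // [ 'index.ts', 'utils.ts' ]
```

Under these made-up numbers the definitions file is the one that gets dropped, which is the kind of gap that would leave a model unable to "see" type information even though the file was open.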

Oh, and before I forget, let's address the elephant in the room. Despite its lofty claims, Copilot X isn't GPT4. It claims to 'see' the code, yet stumbles around it like a newborn calf.

Consider this: I had a piece of instrumental code, clear as day, right at the top of the file.

```typescript
const text = fs.readFileSync("rebecca.txt", "utf8");
const textSplitter = new RecursiveCharacterTextSplitter({ chunkSize: 1000 });
const docs = await textSplitter.createDocuments([text]);
```

I asked a simple question: "Can I use multiple docs for the text splitter? So a file for each 'doc' >". Yes, there was a typo, but it was understandable enough.

Copilot: Yes, you can use multiple files for the text splitter. You can create a separate file for each "doc" and then modify the code to read from each file and split the text accordingly. Here's an example of how you could modify the code to read from multiple files:

```typescript
import * as fs from 'fs';

const docs = ['doc1.txt', 'doc2.txt', 'doc3.txt'];

for (const doc of docs) {
  const text = fs.readFileSync(doc, 'utf-8');
  const sentences = text.split(/[.!?]/);
  console.log(sentences);
}
```

In this example, we are using the fs module to read the contents of each file and then splitting the text into sentences using a regular expression. You can modify the docs array to include the names of the files you want to process.

Me: Sorry, I am talking about the character text splitter

Copilot: No problem! To use the character text splitter with multiple files, you can modify the code to read from each file and split the text accordingly. Here's an example of how you could modify the code to read from multiple files:

```typescript
import * as fs from 'fs';

const docs = ['doc1.txt', 'doc2.txt', 'doc3.txt'];

for (const doc of docs) {
  const text = fs.readFileSync(doc, 'utf-8');
  const chunks = textSplitter.split(text);
  console.log(chunks);
}
```

In this example, we are using the fs module to read the contents of each file and then splitting the text into chunks using the split method of the RecursiveCharacterTextSplitter instance. You can modify the docs array to include the names of the files you want to process.

I am using TypeScript. Firstly, the above is not what I want, and the ‘textSplitter’ it's using there does not have a ‘split’ function, which means it has not fully read and understood my file, let alone looked at the TypeScript definitions for it. I don't expect it to be able to read the TypeScript definitions, but if it is meant to be able to do that, this is a huge fail.

Anyway, there is nothing salvageable from its answer; it completely missed the point and spewed rubbish and non-existent code. Honestly, it's like they rolled the dice on functionality and ended up with a big, fat zero. Maybe they'll roll a six in the next version? But until then, dear reader, keep your expectations as low as mine.

Now, I also use GPT4 quite a bit, so I thought, "Why not give it a shot?" So, I went ahead and hit the GPT4 API endpoint with the same question, passing in just the important code that Copilot X spectacularly failed to comprehend. Let's see what our star child is capable of doing.

Me: Given the following code:

```typescript
const text = fs.readFileSync("rebecca.txt", "utf8");
const textSplitter = new RecursiveCharacterTextSplitter({ chunkSize: 1000 });
const docs = await textSplitter.createDocuments([text]);
vectorStore.addDocuments(docs)
```

Can I use multiple docs for the text splitter? So a file for each 'doc'?

GPT4: Yes, you can use multiple docs (files) for the text splitter. You just need to read each file and add its contents to an array. Then, you can pass this array to the createDocuments method. Here's an example:

```javascript
const fs = require('fs');
const RecursiveCharacterTextSplitter = require('path/to/RecursiveCharacterTextSplitter');

const filePaths = ["rebecca.txt", "anotherFile.txt", "thirdFile.txt"];
const texts = filePaths.map(file => fs.readFileSync(file, "utf8"));

const textSplitter = new RecursiveCharacterTextSplitter({ chunkSize: 1000 });
const docs = await textSplitter.createDocuments(texts);

vectorStore.addDocuments(docs);
```

This code reads three different text files, stores their content in an array, and then creates documents for each file using the createDocuments method.

I was taken aback. The answer was spot on, and the code was as neat as a freshly ironed shirt. Skeptical about the file array part, I copied the entire code block and ran it. And guess what? It worked flawlessly. Just like that, Copilot X was outshined by GPT4.
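GPT4's answer passes every file's text to createDocuments in one array without any per-file metadata, so the resulting chunks carry no obvious record of which file they came from. To show the "one file per doc" idea in a form that runs without dependencies, here is a minimal TypeScript sketch under stated assumptions: `splitFixed` and `chunkFiles` are hypothetical helpers, the splitter is a naive fixed-size one with no chunk overlap (a simplified stand-in, not LangChain's recursive algorithm), and file contents are passed in memory instead of being read from disk. If the splitter here is LangChain's (the class and method names suggest so, but the post does not say), its `createDocuments` also accepts an optional second array of per-text metadata objects, and its single-string method is `splitText`, not the `split` Copilot invented.

```typescript
// A chunk shaped loosely like LangChain's Document (pageContent + metadata).
// Defined locally; this is not LangChain's own type.
interface Chunk {
  pageContent: string;
  metadata: { source: string; chunk: number };
}

// Naive fixed-size splitter: cuts the text every `chunkSize` characters.
// Simplified stand-in: RecursiveCharacterTextSplitter instead prefers to
// break on separators such as blank lines, newlines and spaces.
function splitFixed(text: string, chunkSize: number): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  const pieces: string[] = [];
  for (let i = 0; i < text.length; i += chunkSize) {
    pieces.push(text.slice(i, i + chunkSize));
  }
  return pieces;
}

// One entry per file: file name -> contents. In real code the contents
// would come from fs.readFileSync(path, "utf8").
function chunkFiles(files: Record<string, string>, chunkSize = 1000): Chunk[] {
  const docs: Chunk[] = [];
  for (const [source, text] of Object.entries(files)) {
    splitFixed(text, chunkSize).forEach((pageContent, chunk) => {
      // Each chunk remembers its source file and its position within it.
      docs.push({ pageContent, metadata: { source, chunk } });
    });
  }
  return docs;
}

const docs = chunkFiles({
  "rebecca.txt": "a".repeat(2500),
  "anotherFile.txt": "short text",
});
console.log(docs.map((d) => `${d.metadata.source}#${d.metadata.chunk} (${d.pageContent.length})`));
```

With this sample input, the 2,500-character file yields three chunks (1,000, 1,000 and 500 characters) and the short file yields one, each tagged with the file it came from, which is what makes it possible to trace a retrieved chunk back to its source later.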

Now, back to that command interface I mentioned earlier. You access it with the keystroke "cmd + i". This wouldn't be a problem if we all lived in a monolingual utopia, but guess what? We don't.

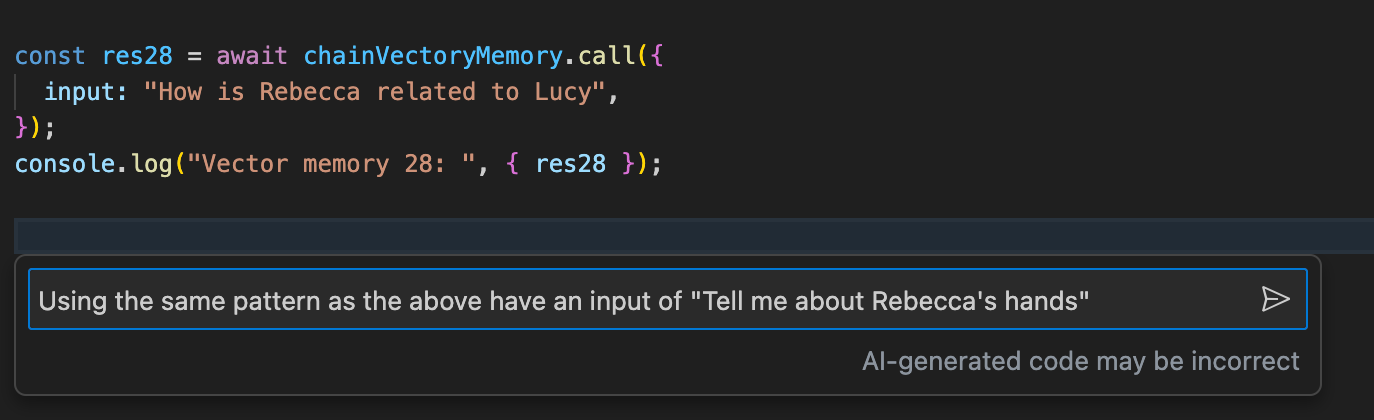

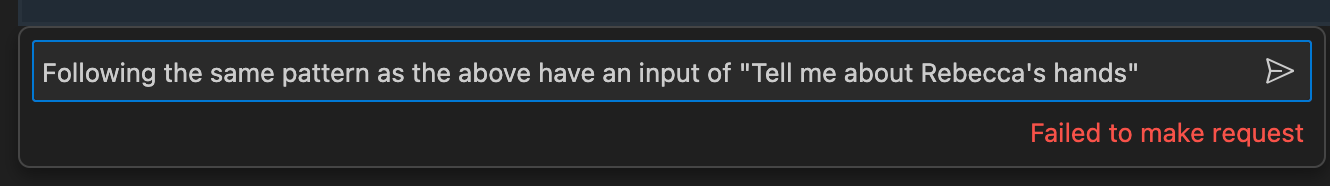

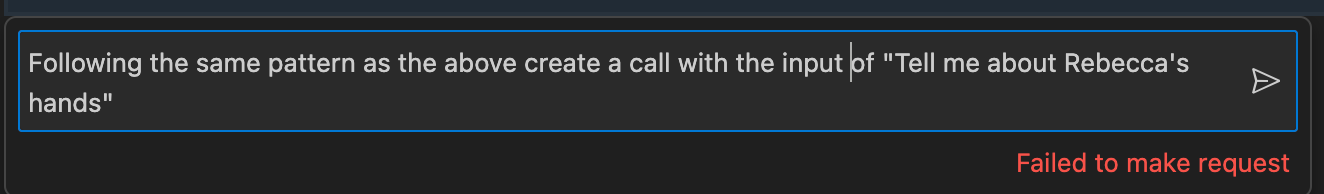

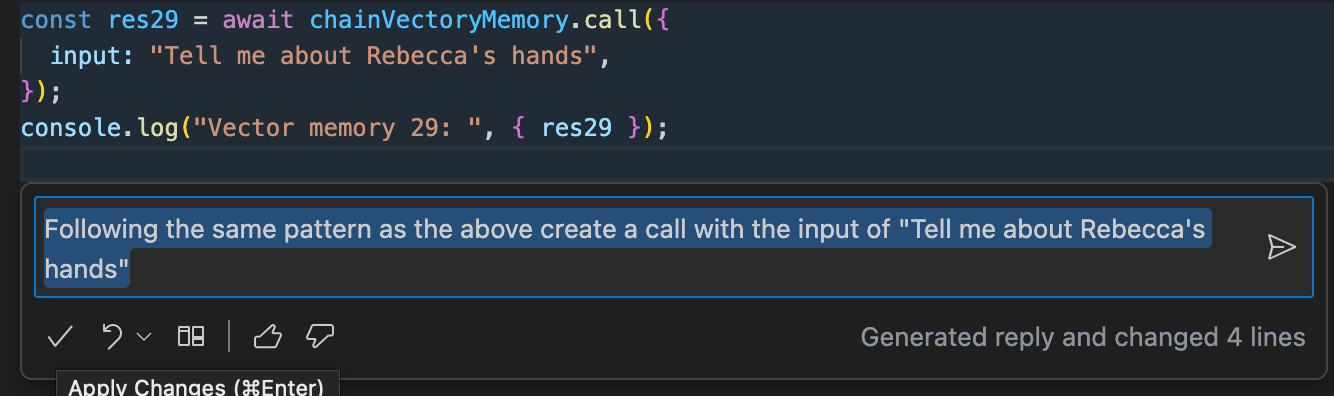

Let's paint a picture, shall we? Say you're an actual human being who happens to know more than one language and has more than one keyboard layout. Imagine that every time you want to bring up the autocomplete window in VS Code with "cmd + space", the OS decides it's time for a language switch. Isn't that fun? The alternative keystroke for autocomplete is our dear friend "cmd + i". Now, welcome to VS Code Insiders, where, without tampering with the key bindings, the autocomplete box is just a myth, a fable, a fairytale, thanks to our fancy new command interface. Oh, and let me show you how 'useful' this has been to me. Would you like to guess how many times I had to alter my command, or whether I gave up? Let me show you this brilliant interaction, truly a marvel of innovation.

Simple question, right? Copy and paste the line above, but change the 'input' to the exact question I provided. Well, don't be surprised to learn this was a complete failure.

We finally got our answer after having to modify the input four times. In that time I could have literally copied the line above and changed it myself, or, you know, just let Copilot autocomplete it for me and hit Tab. This does not bode well at all.

So much for the X-factor, eh?

How Copilot X could improve

Firstly, it should have been built on GPT4. And let's not even get started on the overfitting. It's like an overeager watchdog that barks at every passerby. It's a coding tool, for crying out loud, not the morality police. It has to learn to deal with the word 'sex' without having a meltdown. Seriously, in the context of a codebase, what do they think it means? Microsoft's "ethical" rules seriously need to go: they're causing huge overfitting issues and deteriorating the product. Just look at how useless Bing Chat has become. Some of us use it for serious work and honestly don't need this nannying; there is a line to be drawn here. This is real business work, not ChatGPT for kids.

This tool doesn't feel like it has undergone any 'improvements', and it's certainly no match for GPT4. Maybe they've been resting on their laurels, but from an objective standpoint, allowing all those users access to GPT4 for just $10 a month isn't a sustainable business model. Processing all those requests and tokens means we might be stuck with this subpar tool for a few months, even after the beta stage.

Oh, and another thing that needs a revamp is their marketing strategy. It's overhyped and misleading. It's high time they started setting realistic expectations, both for what they have and what they aim to achieve.

And don't even get me started on this 'X' nonsense. It sounds like some cheesy superhero name. Look, if they insist on naming it like an X-Men character, the least they could do is make it perform like one. It's pretty clear that whatever this is, it's no upgrade. So, let's ditch the pretentious 'X' and go for something more grounded, like a proper name or version number. Because right now, it feels more like an X-periment gone wrong than an X-traordinary tool.

Closing thoughts and verdict

My final verdict? Well, if I were to be generous, I'd call it mediocre. But after much contemplation, I've decided that it's subpar. A solid D- rank.

Looking at what I'm seeing, I don't have much hope for a turnaround. It seems to be more devolution than evolution. However, considering the potential infrastructural and pricing issues, we might have no choice but to accept this as the status quo for a while.

Don't get me wrong, I don't think they've released this tool in bad faith, but the continuous degradation is concerning. The thought of a tool controlled by Microsoft, where the response can vary daily, sends chills down my spine.

So, let me sum it up. The 'X' in GitHub Copilot X, you ask? It's like the 'X' on a pirate's map. Only, in this case, it marks the spot where good design and practical functionality were laid to rest. Quite a tragedy, isn't it?